Amazon S3 File Uploaded Has Empty Bytes

Uploading Files to Amazon S3 With a Rails API Backend and Javascript Frontend

This guide will walk you through a method to integrate S3 hosting with Rails-as-an-API. I will also talk about how to integrate with the frontend. Note that while some of the setup is focused on Heroku, this is applicable to any Rails API backend. There are many short guides out there, but this is intended to bring everything together in a clear manner. I put troubleshooting tips at the end, for some of the errors I ran into.

For this guide, I had a Rails API app in one working directory, and a React app in a different directory. I will assume you already know the basics of connecting your frontend to your backend, and assume that you know how to run them locally. This guide is quite long, and may take you a few hours to follow along with. Please take breaks.

Background

We will be uploading the file straight from the frontend. One advantage of this is that it saves us on big requests. If we uploaded to the backend, then had the backend send it to S3, that would be two instances of a potentially large request. Another advantage is because of Heroku's setup: Heroku has an "ephemeral filesystem." Your files may remain on the system briefly, but they will always disappear on a system cycle. You can try to upload files to Heroku then immediately upload them to S3. However, if the filesystem cycles in that time, you will upload an incomplete file. This is less relevant for smaller files, but we will play it safe for the purposes of this guide.

Our backend will serve two roles: it will save metadata about the file, and handle all of the authentication steps that S3 requires. It will never touch the actual files.

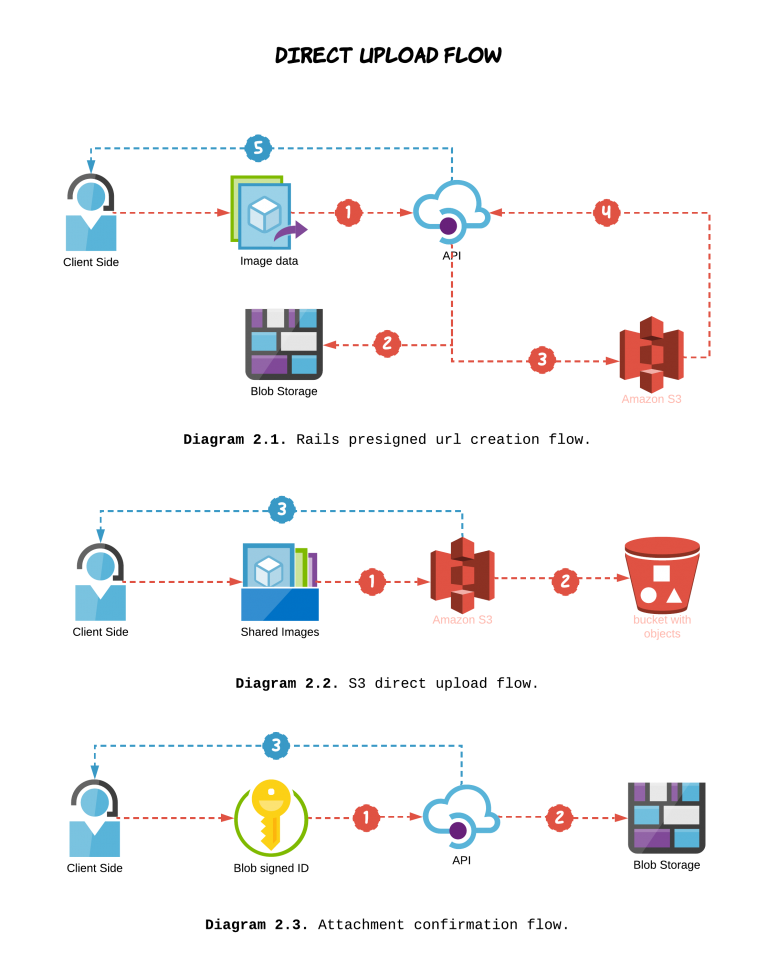

The flow will look like this:

1. The frontend sends a request to the Rails server for an authorized url to upload to.

2. The server (using Active Storage) creates an authorized url for S3, then passes that back to the frontend.

3. The frontend uploads the file to S3 using the authorized url.

4. The frontend confirms the upload, and makes a request to the backend to create an object that tracks the needed metadata.

Steps 1 and 2 are in diagram 2.1. Steps 3 and 4 are diagrams 2.2 and 2.3, respectively. Image taken from Applaudo Studios.

Setting up S3

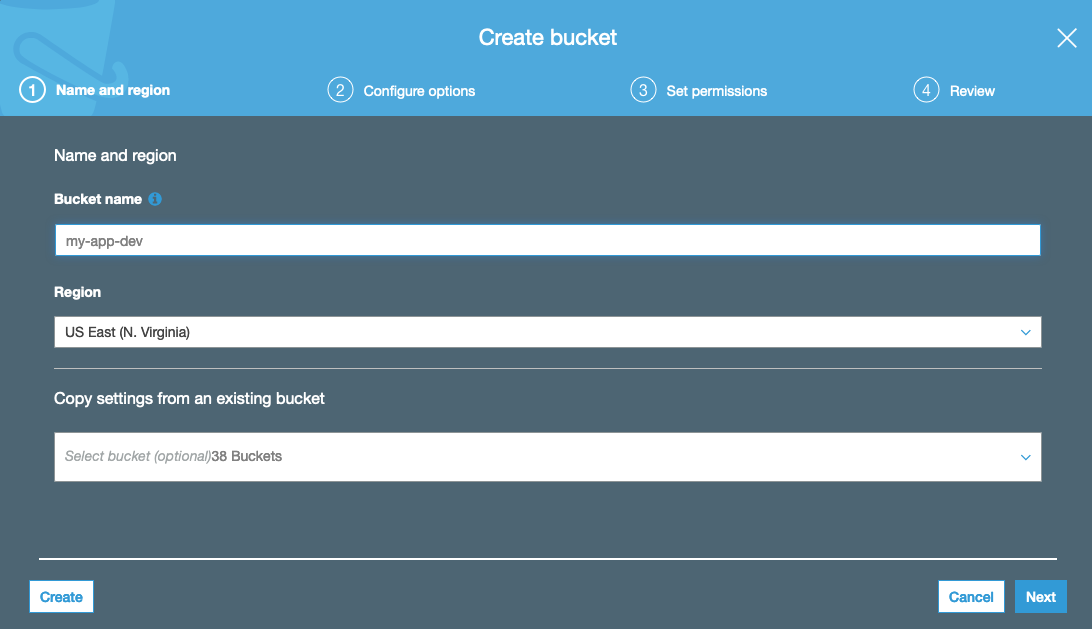

First, we will set up the S3 resources we want. Create two S3 buckets, prod and dev. You can let everything be default, but take note of the bucket region. You will need that later.

What you see in S3 when making a new bucket.

Next, we will set up Cross-Origin Resource Sharing (CORS). This will allow you to make POST & PUT requests to your bucket. Go into each bucket, Permissions -> CORS Configuration. For now, we will just use a default config that allows everything. We will restrict it later.

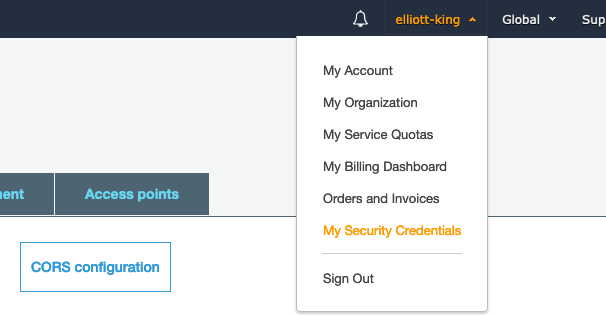

```xml
<?xml version="1.0" encoding="UTF-8"?>
<CORSConfiguration xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
  <CORSRule>
    <AllowedOrigin>*</AllowedOrigin>
    <AllowedMethod>GET</AllowedMethod>
    <AllowedMethod>POST</AllowedMethod>
    <AllowedMethod>PUT</AllowedMethod>
    <AllowedHeader>*</AllowedHeader>
  </CORSRule>
</CORSConfiguration>
```

Next, we will create some security credentials to allow our backend to do fancy things with our bucket. Click the dropdown with your account name, and select My Security Credentials. This will take you to AWS IAM.

Accessing "My Security Credentials"

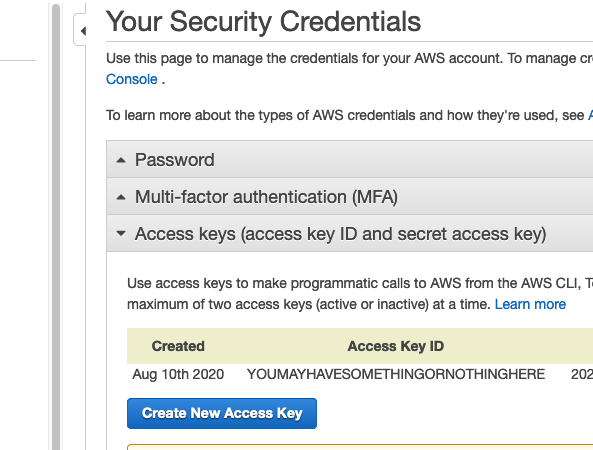

Once in the Identity and Access Management panel, you should go to the access keys section, and create a new access key.

Location of AWS access keys

Here, it will create a key for you. It will never show you the secret again, so make sure you save these values in a file on your computer.

Rails API Backend

Again, I assume you know how to create a basic Rails API. I will be attaching my file to a user model, but you can attach it to whatever you want.

Environment Variables

Add two gems to your Gemfile: gem 'aws-sdk-s3' and gem 'dotenv-rails', then bundle install. The first gem is the S3 software development kit. The second gem allows Rails to use a .env file.

The access key and region (from AWS) are needed within Rails. While locally developing, we will pass these values using a .env file. While on Heroku, we can set the values using heroku config, which we will explore at the end of this guide. We will not be using a Procfile. Create the .env file at the root of your directory, and be sure to add it to your gitignore. You don't want your AWS account secrets ending up on Github. Your .env file should include:

```
AWS_ACCESS_KEY_ID=YOURACCESSKEY
AWS_SECRET_ACCESS_KEY=sEcReTkEyInSpoNGeBoBCaSe
S3_BUCKET=your-app-dev
AWS_REGION=your-region-1
```

Storage Setup

Run rails active_storage:install. Active Storage is a library that helps with uploads to various cloud storages. Running this command will create a migration for a table that will handle the files' metadata. Make sure to rails db:migrate.

Next, we will modify the files that keep track of the Active Storage environment. There should be a config/storage.yml file. We will add an amazon S3 storage option. Its values come from our .env file.

```yaml
amazon:
  service: S3
  access_key_id: <%= ENV['AWS_ACCESS_KEY_ID'] %>
  secret_access_key: <%= ENV['AWS_SECRET_ACCESS_KEY'] %>
  region: <%= ENV['AWS_REGION'] %>
  bucket: <%= ENV['S3_BUCKET'] %>
```

Next, go to config/environments, and update your production.rb and development.rb. For both of these, change the Active Storage service to your newly added one:

```ruby
config.active_storage.service = :amazon
```

Finally, we need an initializer for the AWS S3 service, to set it up with the access key. Create a config/initializers/aws.rb, and insert the following code:

```ruby
require 'aws-sdk-s3'

Aws.config.update({
  region: ENV['AWS_REGION'],
  credentials: Aws::Credentials.new(ENV['AWS_ACCESS_KEY_ID'], ENV['AWS_SECRET_ACCESS_KEY']),
})

S3_BUCKET = Aws::S3::Resource.new.bucket(ENV['S3_BUCKET'])
```

We are now ready to store files. Next we will talk about the Rails model and controller setup.

Model

For my app, I am uploading user resumes, for the user model. You may be uploading images or other files. Feel free to change the variable names to whatever you like.

In my user.rb model file, we need to attach the file to the model. We will also create a helper method that shares the file's public URL, which will become relevant later.

```ruby
class User < ApplicationRecord
  has_one_attached :resume

  def resume_url
    if resume.attached?
      resume.blob.service_url
    end
  end
end
```

Make sure that the model does not have a corresponding column in its table. There should be no resume column in my user's schema.

Direct Upload Controller

Next we will create a controller to handle the authentication with S3 through Active Storage. This controller will expect a POST request, and will return an object that includes a signed url for the frontend to PUT to. Run rails g controller direct_upload to create this file. Additionally, add a route to routes.rb:

```ruby
post '/presigned_url', to: 'direct_upload#create'
```

The contents of the direct_upload_controller.rb file can be found here.

The actual magic is handled by the ActiveStorage::Blob.create_before_direct_upload! function. Everything else just formats the input or output a little bit. Take a look at blob_params; our frontend will be responsible for determining those.

Testing

At this point, it might be useful to verify that the endpoint is working. You can test this functionality with something like curl or Postman. I used Postman.

Run your local server with rails s, then you can test your direct_upload#create endpoint by sending a POST request. There are a few things you will need:

- On a Unix machine, you can get the size of a file using ls -l.

- If you have a different type of file, make sure to change the content_type value.

- S3 also expects a "checksum", so that it can verify that it received an uncorrupted file. This should be the MD5 hash of the file, encoded in base64. You can get this by running openssl md5 -binary filename | base64.

Your POST request to /presigned_url might look like this:

```json
{
  "file": {
    "filename": "test_upload",
    "byte_size": 67969,
    "checksum": "VtVrTvbyW7L2DOsRBsh0UQ==",
    "content_type": "application/pdf",
    "metadata": {
      "message": "active_storage_test"
    }
  }
}
```

The response should have a pre-signed URL and an id:

```json
{
  "direct_upload": {
    "url": "https://your-s3-bucket-dev.s3.amazonaws.com/uploads/uuid?some-really-long-parameters",
    "headers": {
      "Content-Type": "application/pdf",
      "Content-MD5": "VtVrTvbyW7L2DOsRBsh0UQ=="
    }
  },
  "blob_signed_id": "eyJfcmFpbHMiOnsibWVzc2FnZSI6IkJBaHBSQT09IiwiZXhwIjpudWxsLCJwdXIiOiJibG9iX2lkIn19--8a8b5467554825da176aa8bca80cc46c75459131"
}
```

The response direct_upload.url should have several parameters attached to it. Don't worry too much about it; if there was something wrong you would just get an error.

- X-Amz-Algorithm

- X-Amz-Credential

- X-Amz-Date

- X-Amz-Expires

- X-Amz-SignedHeaders

- X-Amz-Signature
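If you want to check these parameters programmatically rather than by eye, the standard URL API can pull them apart. This is a minimal sketch; the function name inspectPresignedUrl is hypothetical, and it only checks that the parameters exist, not that the signature is valid.

```javascript
// Sketch: report which of the expected signing parameters are missing from
// a pre-signed URL, and read its lifetime in seconds.
const EXPECTED_PARAMS = [
  'X-Amz-Algorithm',
  'X-Amz-Credential',
  'X-Amz-Date',
  'X-Amz-Expires',
  'X-Amz-SignedHeaders',
  'X-Amz-Signature',
]

const inspectPresignedUrl = (presignedUrl) => {
  const query = new URL(presignedUrl).searchParams
  const missing = EXPECTED_PARAMS.filter((name) => !query.has(name))
  // X-Amz-Expires is the number of seconds the URL stays valid
  const expiresInSeconds = query.has('X-Amz-Expires')
    ? Number(query.get('X-Amz-Expires'))
    : null
  return { missing, expiresInSeconds }
}
```

Calling inspectPresignedUrl on the direct_upload.url from a healthy response should return an empty missing array.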

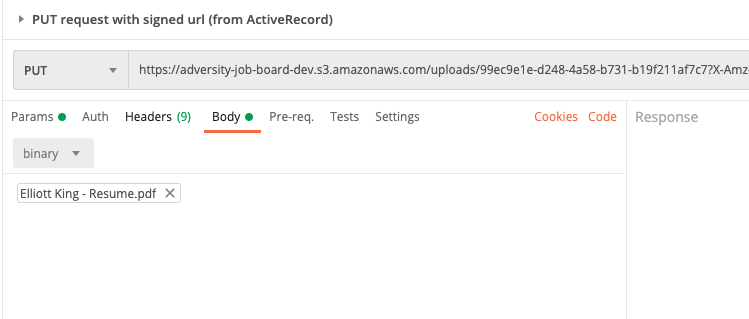

Your direct upload now has an expiration of 10 minutes. If this looks correct, we can use the direct_upload object to make a PUT request to S3. Use the same url, and make sure you include the headers. The body of the request will be the file you are looking to include.

What the PUT looks like in Postman. Headers not shown.
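The same PUT can also be made from a script instead of Postman. This is a minimal sketch, assuming an environment with a global fetch (a modern browser or Node.js 18+); putToS3 is a hypothetical name, directUpload stands for the direct_upload object from the previous response, and the optional fetchImpl parameter exists only so the function can be exercised without a network.

```javascript
// Sketch: upload a file body to the pre-signed URL, sending the headers the
// backend returned unchanged, since they were part of what was signed.
const putToS3 = async (directUpload, body, fetchImpl = fetch) => {
  const response = await fetchImpl(directUpload.url, {
    method: 'PUT',
    headers: directUpload.headers,
    body: body,
  })
  if (!response.ok) {
    throw new Error(`S3 upload failed with status ${response.status}`)
  }
  return response
}
```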

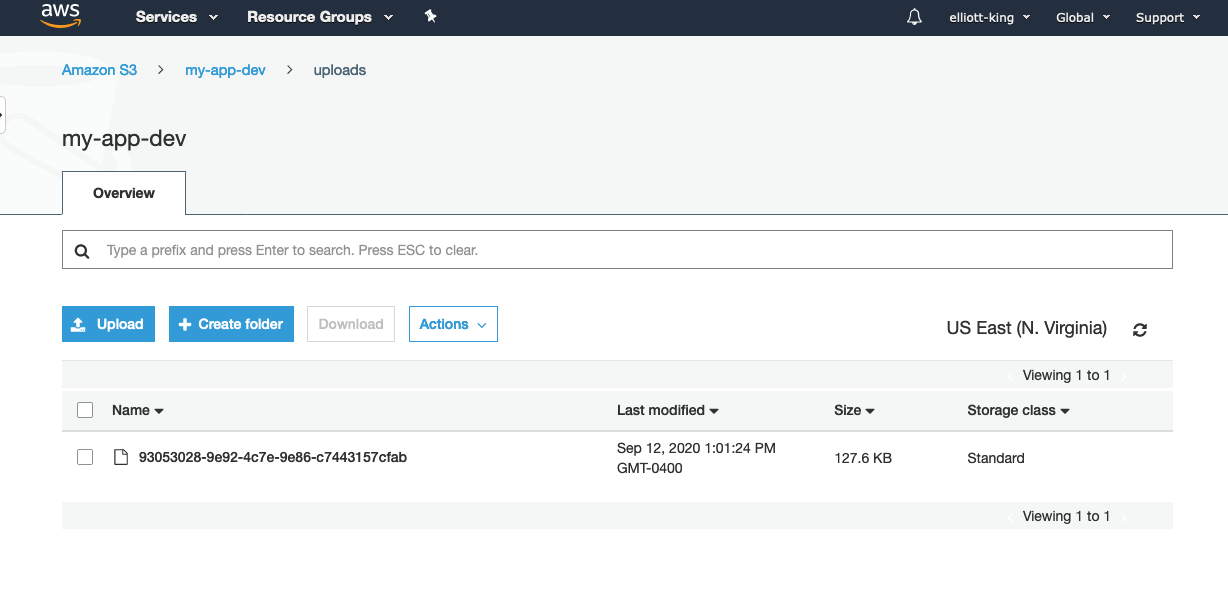

You should get a simple empty response with a code of 200. If you go to the S3 bucket in the AWS console, you should see the folder and the file. Note that you can't actually view the file (you can only view its metadata). If you try to click the "Object URL", it will tell you Access Denied. This is okay! We don't have permission to read the file. Earlier, in my user.rb model, I put a helper function that uses Active Storage to get a public URL. We will take a look at that in a bit.

The uploaded file

User Controller

If you recall our flow:

1. The frontend sends a request to the server for an authorized url to upload to.

2. The server (using Active Storage) creates an authorized url for S3, then passes that back to the frontend. Done.

3. The frontend uploads the file to S3 using the authorized url.

4. The frontend confirms the upload, and makes a request to the backend to create an object that tracks the needed metadata.

The backend still needs one bit of functionality. It needs to be able to create a new record using the uploaded file. For instance, I am using resume files, and attaching them to users. For a new user creation, it expects a first_name, last_name, and email. The resume will take the form of the blob_signed_id we saw earlier. Active Storage just needs this ID to connect the file to your model instance. Here is what my users_controller#create looks like, and I also made a gist:

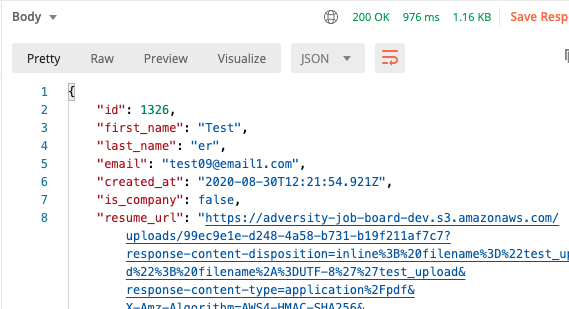

```ruby
def create
  # :pdf carries the blob_signed_id returned by the /presigned_url request
  resume = params[:pdf]
  user_attributes = user_params.except(:pdf)
  user = User.create!(user_attributes)
  # Attaching by signed ID links the already-uploaded blob to this user
  user.resume.attach(resume) if resume.present? && !!user
  render json: user.as_json(root: false, methods: :resume_url).except('updated_at')
end

private

def user_params
  params.permit(:email, :first_name, :last_name, :pdf)
end
```

The biggest new thing is the resume.attach call. Also note that we are returning the json of the user, and including our created resume_url method. This is what allows us to view the resume.

Your params may look different if your model is different. We can again test this with Postman or curl. Here is a json POST request that I would make to the /users endpoint:

```json
{
  "email": "test08@email1.com",
  "first_name": "Test",
  "last_name": "er",
  "pdf": "eyJfcmFpbHMiOnsibWVzc2FnZSI6IkJBaHBLdz09IiwiZXhwIjpudWxsLCJwdXIiOiJibG9iX2lkIn19--3fe2ec7e27bb9b5678dd9f4c7786032897d9511b"
}
```

This is much like a normal user creation, except we call attach on the file ID that is passed with the request. The ID is from the response of our first request, the blob_signed_id field. You should get a response that represents the user, but has a resume_url field. You can follow this public url to see your uploaded file! This url comes from the blob.service_url we included in the user.rb model.

The response, containing the newly created user.

If this is all working, your backend is probably all set up.

The Javascript Frontend

Remember our overall request flow. If we only consider the requests that the frontend performs, it will look like this:

- Make POST request for signed url.

- Make PUT request to S3 to upload the file.

- Make POST to /users to create new user.

We have already tested all of this using curl/Postman. Now it just needs to be implemented on the frontend. I am also going to assume you know how to get a file into Javascript from a computer. <input> is the simplest method, but there are plenty of guides out there.

The only difficult part of this is calculating the checksum of the file. This is a little weird to follow, and I had to guess-and-check my way through a bit of this. To start with, we will npm install crypto-js. Crypto JS is a cryptographic library for Javascript.

Note: if you are using vanilla Javascript and can't use npm, here are some directions to import it with a CDN. You will need:

- rollups/md5.js

- components/lib-typedarrays-min.js

- components/enc-base64-min.js

Then, we will read the file with FileReader before hashing it, according to the following code. Here is a link to the corresponding gist.

```javascript
import CryptoJS from 'crypto-js'

// Note that for larger files, you may want to hash them incrementally.
// Taken from https://stackoverflow.com/questions/768268/
const md5FromFile = (file) => {
  // FileReader is event driven, does not return promise
  // Wrap with promise api so we can call w/ async await
  // https://stackoverflow.com/questions/34495796
  return new Promise((resolve, reject) => {
    const reader = new FileReader()

    reader.onload = (fileEvent) => {
      const binary = CryptoJS.lib.WordArray.create(fileEvent.target.result)
      const md5 = CryptoJS.MD5(binary)
      resolve(md5)
    }
    reader.onerror = () => {
      reject('oops, something went wrong with the file reader.')
    }
    // For some reason, readAsBinaryString(file) does not work correctly,
    // so we will handle it as a word array
    reader.readAsArrayBuffer(file)
  })
}

export const fileChecksum = async (file) => {
  const md5 = await md5FromFile(file)
  const checksum = md5.toString(CryptoJS.enc.Base64)
  return checksum
}
```

At the end of this, we will have an MD5 hash, encoded in base64 (just like we did above with the terminal). We are almost done! The only thing we need are the actual requests. I will paste the code, but here is a link to a gist of the JS request code.

```javascript
import { fileChecksum } from 'utils/checksum'

const createPresignedUrl = async (file, byte_size, checksum) => {
  let options = {
    method: 'POST',
    headers: {
      'Accept': 'application/json',
      'Content-Type': 'application/json',
    },
    body: JSON.stringify({
      file: {
        filename: file.name,
        byte_size: byte_size,
        checksum: checksum,
        content_type: 'application/pdf',
        metadata: {
          'message': 'resume for parsing'
        }
      }
    })
  }
  let res = await fetch(PRESIGNED_URL_API_ENDPOINT, options)
  if (res.status !== 200) return res
  return await res.json()
}

export const createUser = async (userInfo) => {
  const { pdf, email, first_name, last_name } = userInfo

  // To upload pdf file to S3, we need to do three steps:
  // 1) request a pre-signed PUT request (for S3) from the backend
  const checksum = await fileChecksum(pdf)
  const presignedFileParams = await createPresignedUrl(pdf, pdf.size, checksum)
  // If the backend refused, createPresignedUrl returned the raw response
  if (!presignedFileParams.direct_upload) return presignedFileParams

  // 2) send file to said PUT request (to S3)
  const s3PutOptions = {
    method: 'PUT',
    headers: presignedFileParams.direct_upload.headers,
    body: pdf,
  }
  let awsRes = await fetch(presignedFileParams.direct_upload.url, s3PutOptions)
  if (awsRes.status !== 200) return awsRes

  // 3) confirm & create user with backend
  let usersPostOptions = {
    method: 'POST',
    headers: {
      'Accept': 'application/json',
      'Content-Type': 'application/json'
    },
    body: JSON.stringify({
      email: email,
      first_name: first_name,
      last_name: last_name,
      pdf: presignedFileParams.blob_signed_id,
    })
  }
  let res = await fetch(USERS_API_ENDPOINT, usersPostOptions)
  if (res.status !== 200) return res
  return await res.json()
}
```

Note that you need to provide the two global variables: USERS_API_ENDPOINT and PRESIGNED_URL_API_ENDPOINT. Also note that the pdf variable is a Javascript file object. Once more, if you are not uploading pdfs, be sure to change the appropriate content_type.

You now have the required Javascript to make your application work. Just attach the createUser method to form inputs, and make sure that pdf is a file object. If you open the Network tab in your browser devtools, you should see three requests made when you call the method: one to your API's presigned_url endpoint, one to S3, and one to your API's user create endpoint. The last one will also return a public URL for the file, so you can view it for a limited time.

Final Steps and Cleanup

S3 Buckets

Make sure your prod app is using a different bucket from your development. This is so you can restrict its CORS policy. It should only accept PUT requests from one source: your production frontend. For instance, here is my production CORS policy:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<CORSConfiguration xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
  <CORSRule>
    <AllowedOrigin>https://myfrontend.herokuapp.com</AllowedOrigin>
    <AllowedMethod>POST</AllowedMethod>
    <AllowedMethod>PUT</AllowedMethod>
    <AllowedMethod>GET</AllowedMethod>
    <AllowedHeader>*</AllowedHeader>
  </CORSRule>
</CORSConfiguration>
```

You don't need to enable CORS for the communication between Rails and S3, because CORS only applies to requests made from a browser; that communication happens server-side through Active Storage.
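One detail worth getting right in the AllowedOrigin value: the browser sends its origin without a path or trailing slash, so the value should be written the same way. A minimal sketch of deriving it from a frontend URL with the standard URL API; toAllowedOrigin is a hypothetical helper name.

```javascript
// Sketch: reduce a frontend URL to the bare origin that belongs in
// <AllowedOrigin>. URL#origin keeps scheme, host, and port, and drops any
// path, query string, or trailing slash.
const toAllowedOrigin = (frontendUrl) => new URL(frontendUrl).origin

console.log(toAllowedOrigin('https://myfrontend.herokuapp.com/'))
// prints "https://myfrontend.herokuapp.com"
```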

Heroku Production Settings

You may have to update your Heroku prod environment. After you push your code, don't forget to heroku run rails db:migrate. You will also need to make sure your environment variables are right. You can view them with heroku config. You can set them by going to the app's settings in the Heroku dashboard. You can also set them with heroku config:set AWS_ACCESS_KEY_ID=xxx AWS_SECRET_ACCESS_KEY=yyy S3_BUCKET=bucket-for-app AWS_REGION=my-region-1.

Public Viewing of Files

The public URL you receive to view the files is temporary. If you want your files to be permanently publicly viewable, you will need to take a few more steps. That is outside the realm of this guide.

Some Troubleshooting

Here are some errors I ran into while building this guide. It is not comprehensive, but may help you.

Problems with server initialization: make sure the names in your .env files match the names where you access them.

Error: missing host to link to for the first request. In my case, this meant I had not put :amazon as my Active Storage source in development.rb.

StackLevelTooDeep for last request. I had this issue when calling users_controller#create because I had not removed the "resume" field from my schema. Make sure your database schema does not include the file. That should only be referenced in the model with has_one_attached.

AWS requests fail after changing CORS: make sure there are no trailing slashes in your URL within the CORS XML.

Debugging your checksum: this is a hard one. If you are getting an error from S3 saying that the computed checksum is not what they expected, this means there is something wrong with your calculation, and therefore something wrong with the Javascript you received from here. If you double check the code you copied from me and can't find a difference, you may have to figure this out on your own. For Javascript, you can check the MD5 value by calling .toString() on it with no arguments. On the command line, you can drop the -binary flag.
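Both of those debugging outputs are hex strings, while the value sent to S3 is base64, so it can help to convert between the two when comparing. A minimal Node.js sketch using the built-in Buffer; the helper names hexToBase64 and base64ToHex are my own.

```javascript
// Sketch: convert an MD5 digest between its hex and base64 spellings.
const hexToBase64 = (hex) => Buffer.from(hex, 'hex').toString('base64')
const base64ToHex = (b64) => Buffer.from(b64, 'base64').toString('hex')

// The MD5 of the string "hello", converted from hex to base64:
console.log(hexToBase64('5d41402abc4b2a76b9719d911017c592'))
// prints "XUFAKrxLKna5cZ2REBfFkg=="
```

If the hex values from the browser and the command line agree but S3 still complains, the problem is more likely in the base64 step or in the headers than in the hash itself.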

Sources and References

Much of this was taken from Arely Viana's blog post for Applaudo Studios. I linked the code together, and figured out how the frontend would look. A huge shout-out to them!

Here are some other resources I found useful:

- Heroku's guide for S3 with Rails - this is not for Rails as an API, but it does talk about environment setup

- The code for Arely's guide - also has some example JSON requests

- Rails Active Storage Overview

- Uploading to S3 with JS - this also uses AWS Lambda, with no backend

Source: https://elliott-king.github.io/2020/09/s3-heroku-rails/
